AI & Data Science

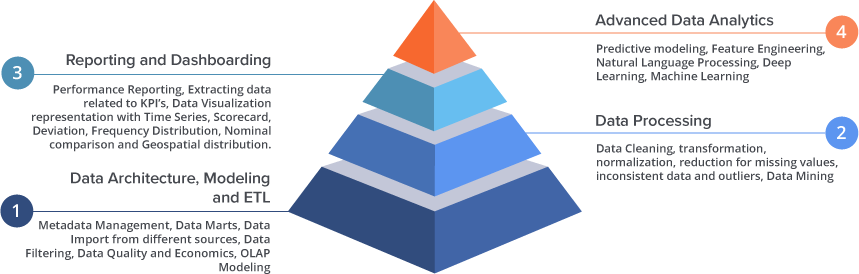

JMI is a leader in Data Science and AI-based service offerings. The combination of our subject matter experts (SMEs), engineers, extensive homegrown IP, and thorough knowledge of processes, tools, and technologies gives us a decisive advantage in solving our clients' most complex problems.

Our engineers have helped numerous companies integrate with IBM Watson, Microsoft LUIS, and Amazon Lex, and have built custom AI engines with enhanced deep learning capabilities using Google's TensorFlow.